As soon as I started working in data centers, I heard the term “load balancer”. While the term itself is simple and self-explanatory, the role of a load balancer in a network is sometimes unclear. In this article, we give you a complete overview of load balancing: we present the main uses of this powerful device and where to deploy it. By the end, you will know whether studying load balancers is worth it for you.

What is a Load Balancer?

Let’s start with a simple definition: a load balancer is a network device that distributes traffic to other devices. Before we dive any further, note that this distribution is intelligent. Any network device, like a router or a switch, forwards traffic to other devices. A load balancer, however, decides where to send each request based on advanced checks, even at the application layer.


All of this may sound abstract, but an example will make things clear. Imagine you want to publish a website with high availability, so you need at least two servers. You install the web server software on both and have them serve the same website. So far, so simple, but users will point their browsers at a single address, say example.com. How do we transparently direct each user to one server or the other? You guessed it: with a load balancer. The load balancer polls the servers to check that they are healthy and distributes the requests among them. If a server fails, the load balancer moves all the traffic to the other one. You can also use more advanced configurations, like dynamically selecting the least-loaded server.

How is all of this possible in practice? We use the principle of the reverse proxy; just read the next section…

What is a Reverse Proxy?

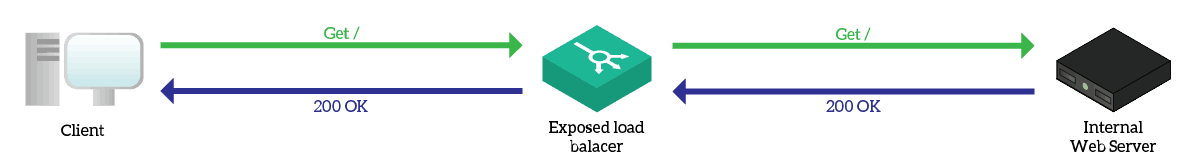

In the previous article, we explained what a proxy is: a device you ask to make requests on your behalf. A reverse proxy is a similar technology, but it works the other way around. With a “normal” proxy, you knowingly contact the proxy and ask it to make requests for you. With a reverse proxy, instead, you believe you are contacting the external server directly. In reality, you are contacting the reverse proxy, which pretends to be the external server. The reverse proxy then makes a separate request to the real server on your behalf and uses that server’s response to answer your request.

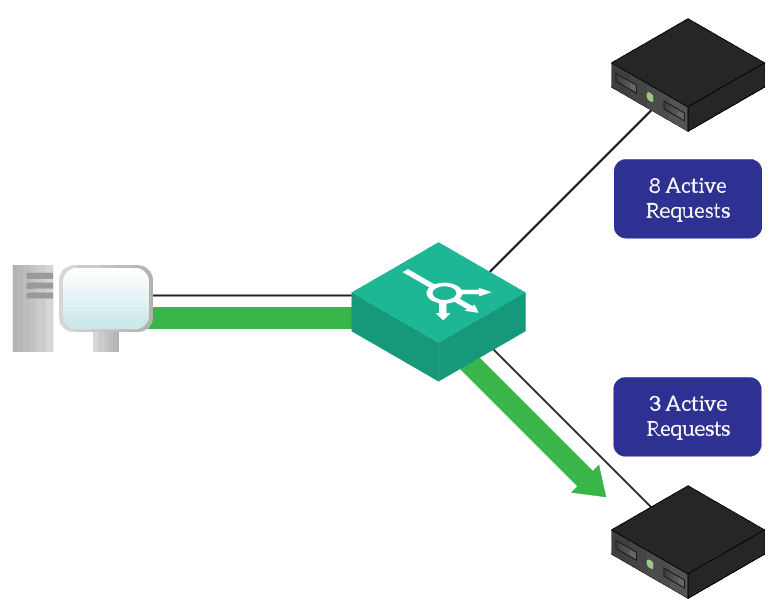

A reverse proxy keeps your request on hold while it obtains the response from the real server. Since the reverse proxy opens your request and reads its content, it can intelligently decide which server to contact. Furthermore, since all requests pass through the reverse proxy, it can count how many requests are active on each server, which tells it which server is the least congested. At its core, a load balancer is a reverse proxy, and this is what allows it to do all its magic.
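To make the idea concrete, here is a minimal sketch of a reverse proxy in Python, using only the standard library. It assumes plain HTTP, GET requests only, and a hypothetical list of backend (host, port) pairs; the name make_proxy_handler is ours, not part of any product. The client’s request waits while the proxy opens a new connection to the chosen server, and a shared counter of in-flight requests lets it pick the least congested one.

```python
import http.client
import http.server
import threading


def make_proxy_handler(backends):
    """Return a request handler class that forwards GET requests to `backends`.

    `backends` is a hypothetical list of (host, port) pairs for the real servers.
    """
    active = {backend: 0 for backend in backends}  # in-flight requests per backend
    lock = threading.Lock()  # the server may handle requests in parallel threads

    class ReverseProxyHandler(http.server.BaseHTTPRequestHandler):
        def do_GET(self):
            # Choose the least congested backend and count this request against it.
            with lock:
                backend = min(backends, key=lambda b: active[b])
                active[backend] += 1
            try:
                # Open a separate request to the real server; the client's
                # request stays on hold until this one completes.
                conn = http.client.HTTPConnection(*backend, timeout=5)
                host = self.headers["Host"]
                conn.request("GET", self.path, headers={"Host": host} if host else {})
                upstream = conn.getresponse()
                status = upstream.status
                content_type = upstream.getheader("Content-Type", "application/octet-stream")
                body = upstream.read()
                conn.close()
            except (OSError, http.client.HTTPException):
                self.send_error(502, "Bad Gateway")  # backend unreachable or broken
                return
            finally:
                with lock:
                    active[backend] -= 1
            # Answer the client as if the proxy itself were the real server.
            self.send_response(status)
            self.send_header("Content-Type", content_type)
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args):
            pass  # silence per-request logging in this sketch

    return ReverseProxyHandler
```

To run it, you could pass the handler to `http.server.ThreadingHTTPServer`, for example `ThreadingHTTPServer(("0.0.0.0", 8080), make_proxy_handler([("10.0.0.11", 80), ("10.0.0.12", 80)])).serve_forever()`, replacing those example addresses with your own servers. A real load balancer also handles other methods, streaming, keep-alive, and many headers that this sketch ignores.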

Note: for the reverse proxy to work, it must be placed at specific points in the network, and the real servers may need special configuration. You can’t just plop a load balancer anywhere in the network and expect it to work. Of course, this is good! If it were otherwise, anyone could pretend to be a website, like your bank’s website. You can imagine the outcomes…

How to deploy a load balancer

So, we can’t plop our load balancer just anywhere in the network. Where do we install it, then? When deploying a load balancer, you have two options: a single leg (one network interface), or two or more legs.

First of all, the load balancer must be close to the backend servers. This does not apply to geographical load balancing, which we won’t cover today. If you are simply distributing load, the load balancer should be in the same data center as the servers. Furthermore, all the servers that work together to offer a service (the ones being load balanced) should be in the same subnet. This last point is just a best practice, but one worth following.

One or two legs?

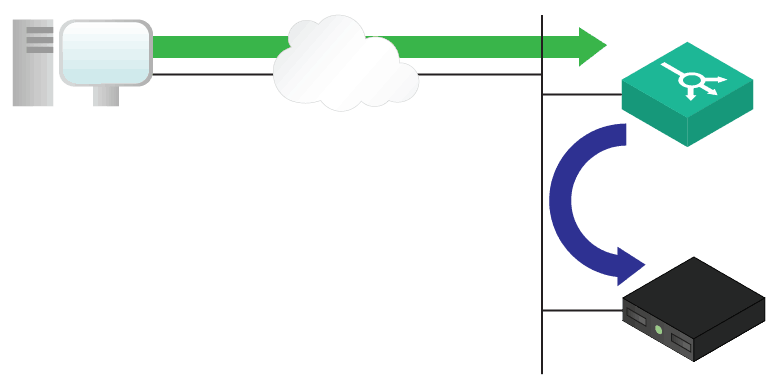

If you deploy your load balancer with one leg, it has a single interface in a single network. It receives requests from clients on that interface and contacts the servers through the same interface. The major advantage of this setup is simplicity, but it poses security and scalability challenges. Since the load balancer sits in the same network as the backend servers, clients may contact the backend servers directly, bypassing it. Furthermore, if the load balancer does not perform source NAT, the servers send their responses directly to the client instead of back through the load balancer. The client then receives a reply from an address it never contacted, and the connection breaks.

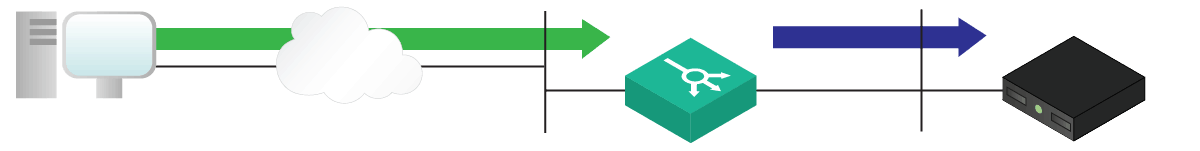

Having multiple legs is much better. In this setup, clients reach the load balancer on one interface, and the load balancer uses other interfaces to contact the backend servers. The backend subnets can use the load balancer as their default gateway, so responses flow back through it without the need for source NAT. However, the best practice is to always use NAT. While this setup is somewhat more advanced, it addresses the flaws we found in the one-leg setup. Much better!

The floating IPs

A load balancer is a special network device, not just another boring router. If we use NAT, as mentioned previously, what kind of NAT do we do exactly? At a minimum, a load balancer performs destination NAT: the client contacts the load balancer on a given IP address, and the load balancer rewrites that destination address to the address of the chosen web server. When responses would not otherwise return through the load balancer, as in the one-leg setup, it also performs source NAT, replacing the client’s address with one of its own. Okay, but what are the addresses a client contacts the load balancer on?

Most devices have a single IP address in each network, but load balancers are different. On a load balancer, you can add as many IP addresses as you like in each network and use each one to expose a service. Clients of a given service reach the load balancer on that service’s address, and you associate that address with a pool of servers to load balance. These IPs are called floating because, although they live on the load balancer, they do not represent the balancer itself but the services it exposes.
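The sketch below ties floating IPs and NAT together. It models a hypothetical table that maps each floating IP and port to a pool of servers, rewrites the destination of incoming packets to a real server (destination NAT), and rewrites replies so that they appear to come from the floating IP again. The optional snat_ip parameter, also hypothetical, adds source NAT as in the one-leg setup. Packets are reduced to source and destination (IP, port) pairs, and the pool is chosen with plain round robin; this is an illustration of the idea, not how any specific product implements it.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import Tuple

Addr = Tuple[str, int]  # (IP address, TCP port)


@dataclass(frozen=True)
class Packet:
    src: Addr
    dst: Addr


class VirtualServers:
    """Toy model of floating IPs and NAT on a load balancer (illustration only)."""

    def __init__(self, snat_ip=None):
        self.pools = {}        # (floating IP, port) -> round-robin iterator of servers
        self.connections = {}  # (client, floating IP) -> (server, source the server sees)
        self.replies = {}      # (server, source the server sees) -> (client, floating IP)
        self.snat_ip = snat_ip  # hypothetical LB address used for source NAT, or None

    def add_service(self, vip, port, servers):
        """Expose a service on a floating IP and port, backed by a pool of servers."""
        self.pools[(vip, port)] = cycle(servers)

    def inbound(self, pkt):
        """Client -> floating IP: destination NAT (plus source NAT if configured)."""
        key = (pkt.src, pkt.dst)
        if key not in self.connections:
            server = next(self.pools[pkt.dst])  # new connection: pick a pool member
            # With source NAT the server sees the load balancer as the client, so
            # its reply comes back to us. Reusing the client's port is a
            # simplification; real devices allocate their own source ports.
            seen_src = (self.snat_ip, pkt.src[1]) if self.snat_ip else pkt.src
            self.connections[key] = (server, seen_src)
            self.replies[(server, seen_src)] = key
        server, seen_src = self.connections[key]
        return Packet(src=seen_src, dst=server)

    def outbound(self, pkt):
        """Server -> client: make the reply come from the floating IP again."""
        client, vip = self.replies[(pkt.src, pkt.dst)]
        return Packet(src=vip, dst=client)
```

Without snat_ip, the real servers see the client’s own address, so their replies only return through the load balancer if it is their default gateway, as in the multi-leg setup.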

Fancy stuff on a load balancer

Now you know what a load balancer is, how it works, and how to set it up. It is time to check out the cool stuff it can do for us.

Health Checks

What is the point of having a load balancer if it directs traffic to a dead server? None, of course, and load balancers are designed to avoid exactly that. They can run sophisticated health checks to verify that the backend servers are up and running, checks that go way beyond a simple ping.

A load balancer can send real application requests, like HTTP or FTP, and analyze the response to see whether the service is working as expected. A simpler and very common check just verifies that the service’s TCP port, like 80 for HTTP, accepts connections. When you configure a pool of servers for load balancing, enable at least a simple health check: without one, the load balancer keeps sending traffic to failed servers.
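The two kinds of checks can be sketched as follows: a TCP check that only confirms the port accepts connections, and an HTTP check that also inspects the status code and, optionally, the body. The function names, the 2-second timeout, and the expected status 200 are assumptions made for this Python sketch. Real load balancers run such checks periodically and often require several consecutive failures before marking a server down.

```python
import http.client
import socket


def tcp_check(host, port, timeout=2.0):
    """Simplest check: does the service's TCP port accept a connection?"""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def http_check(host, port, path="/", expect_status=200, expect_text=None, timeout=2.0):
    """Application check: send a real HTTP request and inspect the response."""
    try:
        conn = http.client.HTTPConnection(host, port, timeout=timeout)
        try:
            conn.request("GET", path)
            resp = conn.getresponse()
            body = resp.read().decode("utf-8", errors="replace")
        finally:
            conn.close()
    except (OSError, http.client.HTTPException):
        return False  # no answer, or not valid HTTP: treat the server as down
    if resp.status != expect_status:
        return False
    # Optionally require some known text, e.g. a marker the page always contains.
    return expect_text is None or expect_text in body


def healthy_members(pool, check):
    """Keep only the (host, port) members of a pool that pass `check`."""
    return [member for member in pool if check(*member)]
```

The HTTP check catches cases the TCP check misses, such as a web server that accepts connections but answers every request with an error page.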

Load balancing

Guess what, a load balancer can do load balancing! To send requests to the least congested server, a load balancer can use several techniques. It can pick the longest-idle server (the one that has gone the longest without receiving a request). Even better, it can pick the server with the fewest active requests, a method often called least connections. The load balancer knows this information because all requests pass through the load balancer itself.

Simpler techniques include round robin (cycling through the servers in order) and always sending traffic to the first server until it fails, then moving to the next one.
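The selection strategies above can be sketched as small Python functions over a pool of servers. The Server record, its fields, and the function names are hypothetical; each strategy skips servers that failed their health check.

```python
import itertools
import time
from dataclasses import dataclass, field


@dataclass
class Server:
    name: str
    up: bool = True       # result of the latest health check
    active: int = 0       # requests currently in flight
    last_used: float = field(default_factory=time.monotonic)  # time of last request


def _healthy(pool):
    up = [s for s in pool if s.up]
    if not up:
        raise RuntimeError("no healthy servers in the pool")
    return up


def least_connections(pool):
    """Fewest active requests wins (ties go to the earlier server)."""
    return min(_healthy(pool), key=lambda s: s.active)


def longest_idle(pool):
    """The server that has waited longest since its last request wins."""
    return min(_healthy(pool), key=lambda s: s.last_used)


def first_available(pool):
    """Always the first healthy server: the others act as standbys."""
    return _healthy(pool)[0]


def round_robin(pool):
    """Return a picker that cycles through the pool, skipping servers that are down."""
    ring = itertools.cycle(pool)

    def pick():
        for _ in range(len(pool)):
            server = next(ring)
            if server.up:
                return server
        raise RuntimeError("no healthy servers in the pool")

    return pick
```

These functions only choose a server; in a working balancer, the code that forwards the request would also increment `active`, update `last_used`, and decrement `active` when the response completes.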

Custom routing of requests

Since the load balancer is a reverse proxy, it can inspect requests coming from clients and use the data they contain to distribute them intelligently. For example, you can have a multi-language website where each language has its own path, like example.com/en-us/ for English and example.com/it-it/ for Italian.

The load balancer can look at the requested path and distribute traffic among the servers based on it. As a result, you can dedicate a server to each language, while the whole site still looks like a single server from the outside.
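Here is a minimal Python sketch of path-based routing, assuming a hypothetical table that maps URL path prefixes to server pools. It reads the path from the HTTP request line and picks the longest matching prefix, so a more specific route such as /en-us/shop/ wins over /en-us/; requests that match nothing go to a default pool.

```python
from urllib.parse import urlsplit


def route_by_path(path, routes, default_pool):
    """Return the pool of the longest route prefix that matches `path`."""
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    if not matches:
        return default_pool
    return routes[max(matches, key=len)]


def pool_for_request(request_line, routes, default_pool):
    """Inspect an HTTP request line such as 'GET /it-it/ HTTP/1.1'."""
    _method, target, _version = request_line.split(" ", 2)
    path = urlsplit(target).path  # drop any query string, e.g. '?page=2'
    return route_by_path(path, routes, default_pool)
```

Longest-prefix matching keeps the table order irrelevant, which makes it harder to shadow a specific route by accident.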

SSL Offload

SSL (today, its successor TLS) is effectively mandatory for web traffic and is the foundation of HTTPS. For a server to expose a website over HTTPS, it must handle encryption and certificates. This can be a CPU-intensive job that we may want to take off the servers. To do that, we can install the SSL certificate on the load balancer and have it expose the service over HTTPS. The load balancer then contacts the internal servers over plain HTTP.

Another benefit is having all SSL certificates in one place, managed by the same administrators. This simplifies certificate renewal, since you know exactly where each certificate is installed.

A load balancer can even do the opposite: talk HTTPS to servers that only accept HTTPS, and expose the service to clients over plain HTTP. This is useful only in special circumstances.

Persistence

Many websites use cookies to track sessions, for example to remember that you are logged in. However, if one web server stores your session data locally, the other web servers won’t have it. If the load balancer sends your next request to another server, you may run into session problems, like being logged out.

With persistence (also called session affinity or sticky sessions), the load balancer looks at your source IP address, or even at a cookie, and always sends you to the same server. This way, you end up on the server that knows your session, without having to authenticate every time.
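The two persistence methods can be sketched as follows. This Python sketch assumes a hypothetical cookie named lb_server that the load balancer inserts into its responses; clients without a valid cookie are matched by source IP address, and brand-new clients are assigned round robin. Real products keep persistence records with timeouts, which this sketch omits.

```python
import itertools


class StickyBalancer:
    """Round robin for new clients, then keep each client on the same server."""

    COOKIE = "lb_server"  # hypothetical name of the cookie the load balancer inserts

    def __init__(self, servers):
        self.servers = list(servers)
        self.ring = itertools.cycle(self.servers)
        self.by_ip = {}  # source IP -> server chosen the first time we saw it

    def pick(self, client_ip, cookies):
        """Return (server, Set-Cookie value to add to the response, or None)."""
        # 1. Cookie persistence: a valid cookie names the server directly.
        named = cookies.get(self.COOKIE)
        if named in self.servers:
            return named, None
        # 2. Source-IP persistence: reuse the server already chosen for this IP.
        if client_ip not in self.by_ip:
            self.by_ip[client_ip] = next(self.ring)
        server = self.by_ip[client_ip]
        # Insert the cookie so later requests stick even if the client's IP changes.
        return server, f"{self.COOKIE}={server}; Path=/"
```

Source-IP persistence alone can spread load unevenly when many users share one public address, for example behind a corporate NAT; cookie persistence avoids that, which is why the sketch checks the cookie first.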

Easing planned maintenance

Large companies expose services 24/7. Sooner or later, however, even the biggest enterprise needs to take some servers offline for maintenance. A load balancer helps here, because you can tell it to exclude some servers from the pool. Those servers then stop receiving requests, and you can work on them without worry.

The load balancer lets you do this gracefully: it won’t abruptly terminate active sessions to the server you are removing from the pool. Instead, it simply stops sending new requests to that server and waits for the existing ones to complete.
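Graceful removal is often called draining. The Python sketch below, with hypothetical member states "enabled", "draining", and "disabled", shows the logic: a draining member receives no new requests, and it becomes disabled only when its last active request completes.

```python
from dataclasses import dataclass


@dataclass
class Member:
    name: str
    state: str = "enabled"  # hypothetical states: "enabled", "draining", "disabled"
    active: int = 0         # requests currently being served


class Pool:
    def __init__(self, names):
        self.members = {name: Member(name) for name in names}

    def start_request(self):
        """Send a new request only to enabled members, least busy first."""
        candidates = [m for m in self.members.values() if m.state == "enabled"]
        if not candidates:
            raise RuntimeError("no enabled members in the pool")
        member = min(candidates, key=lambda m: m.active)
        member.active += 1
        return member.name

    def finish_request(self, name):
        member = self.members[name]
        member.active -= 1
        # A draining member leaves the pool once its last request completes.
        if member.state == "draining" and member.active == 0:
            member.state = "disabled"

    def drain(self, name):
        """Stop new requests to a member without cutting the existing ones."""
        member = self.members[name]
        member.state = "draining" if member.active else "disabled"

    def enable(self, name):
        """Put a member back into service after maintenance."""
        self.members[name].state = "enabled"
```

Once a member reaches "disabled", it is safe to work on; calling `enable` afterwards returns it to the pool.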

Wrapping it up

Load balancers let you safely expose many services in the data center. They enable scalability, security, and ease of management, thanks to their role as reverse proxies. If you want a load balancer, the biggest player in the industry is currently F5. If you go virtual, Citrix NetScaler is a great solution. Other vendors, like Cisco, have their own products as well, but they still lag behind.

What do you think of load balancers? How do you see them in your infrastructure? Let me know your opinion in the comments!