We all know that computers process huge amounts of information nonstop. How is this possible, exactly? At the very foundation of computing, we have binary math, a way to store and process data using electrical signals. In this article, we will find out how the brain of a computer works and uncover the secrets of binary math. By understanding these concepts, you will truly understand computing. More than that, you will become a networking pro, because binary math is a requirement for understanding subnetting, a must-have skill for any networker.

Information as a state

As humans, we have a lot of memory, and our brain is remarkably efficient at giving us what we want to know at the right time. We store a lot of information in our brains in the form of images, words, and feelings so that we can access it later. This is where your own knowledge resides, but what about a computer? It is pretty obvious that a computer cannot store information in the form of feelings, so how does it do it? To a non-technical person, a computer may seem to have much more memory and “reasoning skills” than an average person (computers have been beating top chess players since the 1990s). The truth is that computers are just machines, with a given amount of storage and computational power, and they cannot go beyond those limits. So how do they process and store information within their limits? They use binary numbers. To understand what binary numbers are, we first need to address two major issues. The first is about logic and functionality: a computer must be deterministic, meaning that processing the same data set any number of times must always produce the same result. There is no room for free interpretation; in computing, everything has to be black or white. The second issue is much more practical: our computer runs on electricity, and we have to use that electricity to represent information. So we have two options: if there is electricity, it’s white; if not, it’s black.

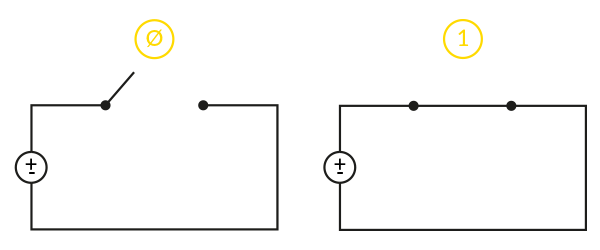

Imagine that a computer is made of a set of electric switches (or, at least, that we can think of it this way), as in the picture above. The whole circuit can be in two states: it is either powered on or powered off. As you might guess, a switch that is powered on represents a 1 (one), while a switch that is powered off represents a 0 (zero). Each switch can represent a zero or a one and can transition between these two states, but without intermediate steps (0.5 does not exist; we have just zero and one). This is because we are working on such a small scale inside a computer that we can reliably detect only whether electricity is flowing or not, not measure exactly how much is flowing in the circuit.

With a switch representing either zero or one, we can represent just two items. But if we use two switches at the same time, we can represent four items, because we have four different combinations (both off, both on, first on and second off, first off and second on). The more switches we add to the picture, the bigger the information we can represent. In the end, the information is a “picture” of all the switches in their current state. By changing the value of a single switch, you might end up changing the whole meaning of the information. Each time you add a switch to the picture, you double all the possible combinations.

The idea of information as a picture captures exactly what information is when it comes to processing it. The CPU (Central Processing Unit) of a computer is what does all the “thinking”, and it works with a clock, which is nothing more than a signal that tells the CPU “do that thinking right now!”; then it pauses for an instant and does it again, and again, and again. When the CPU receives that order, it reads (takes a picture of) the current state and modifies it. Those modifications become the new current state for the next time. Everything happens in fractions of a microsecond.
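Going back to the switches, the doubling rule can be checked by brute force. In this minimal Python sketch, a switch is modeled as 0 (off) or 1 (on), and the helper name `switch_combinations` is our own.

```python
from itertools import product


def switch_combinations(n_switches: int) -> list[tuple[int, ...]]:
    """Return every possible state of n switches (0 = off, 1 = on)."""
    return list(product((0, 1), repeat=n_switches))


for n in range(1, 5):
    # Each extra switch doubles the number of states: 2, 4, 8, 16
    print(n, "switches ->", len(switch_combinations(n)), "combinations")

print(switch_combinations(2))  # [(0, 0), (0, 1), (1, 0), (1, 1)]
```

The four tuples printed for two switches are exactly the four combinations listed in the text.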

In a computer, we cannot have a person turning a switch on, then off, then on again, especially not in fractions of a microsecond. Instead, specific circuits are used to represent a zero or a one. Switches are replaced by “cells”, where a cell can contain only a zero or a one. The concept of a cell is implemented differently depending on the needs: if we want instant access but do not need to keep the cell state without electricity, we use logic circuits; if we want persistence after a power outage, we write it to a hard disk, and so on. We won’t explore these technologies in this article; instead, we will focus on the concept of the cell. No matter what technology is running underneath, that cell is called a bit, and it represents the smallest piece of information in IT:

A bit is the smallest piece of information in computing. It is a “cell” that can have one of two values (one at a time): zero or one.

However, a single bit contains almost nothing: it can represent only two items, which makes it a tiny unit of information. More than that, it is not practical to process (computers do not work one bit at a time). So, as a convention, we defined the byte. A byte is nothing more than 8 sequential bits, which gives a total of 256 different combinations. In the common x86 convention, a “word” (a technical term, not a set of letters with meaning) is two bytes, or 16 bits (65,536 combinations), while a double word (or simply “double”) is twice a word: four bytes, or 32 bits (more than 4 billion combinations). Another technical term is the nibble, which is less popular and represents half a byte: 4 bits (16 combinations). The most important of these units is the byte, since everything works with multiples of bytes. You probably already know the most common multiples of the byte:

- Kilobyte (KB) – 1,000 bytes

- Megabyte (MB) – 1,000,000 bytes or 1,000 KB

- Gigabyte (GB) – 1,000,000,000 bytes, 1,000,000 KB, or 1,000 MB

- Terabyte (TB) – 1,000 GB

We also have the Petabyte (PB), which is 1,000 TB, then the Exabyte (EB), the Zettabyte (ZB) and the Yottabyte (YB). Each is, as you might have guessed, one thousand times the previous one.
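The unit sizes above can be double-checked with a few lines of Python. This sketch assumes the 16-bit word and 32-bit double word described above, and decimal multiples (powers of 1,000); the names `UNIT_BITS`, `PREFIXES`, `combinations`, and `bytes_in` are illustrative only.

```python
# Bit widths of the units described above; word and double word follow
# the 16-bit and 32-bit convention used in this article.
UNIT_BITS = {"nibble": 4, "byte": 8, "word": 16, "double word": 32}

# Decimal multiples of the byte, each 1,000 times the previous one.
PREFIXES = ["KB", "MB", "GB", "TB", "PB", "EB", "ZB", "YB"]


def combinations(bits: int) -> int:
    """Number of distinct values that fit in the given number of bits."""
    return 2 ** bits


def bytes_in(unit: str) -> int:
    """How many bytes one unit contains: KB = 1000**1, MB = 1000**2, ..."""
    return 1000 ** (PREFIXES.index(unit) + 1)


for name, bits in UNIT_BITS.items():
    print(f"{name}: {bits} bits -> {combinations(bits):,} combinations")

for unit in PREFIXES:
    print(f"1 {unit} = {bytes_in(unit):,} bytes")
```

The first loop prints 16, 256, 65,536, and 4,294,967,296 combinations, matching the figures in the text; the second shows, for example, that 1 GB is 1,000,000,000 bytes.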

A computer is able to process several bytes at the same time, in a fraction of a microsecond. It has to process many bits at once (a single bit won’t suffice) because information only makes sense when you can see all of its parts together. Imagine that you have to read a word, but you can read only one letter at a time, and each time you read a letter you forget the previous one: you would never figure out the word. To read the whole word, you have to see all its letters at the same time. A computer works in a very similar way.

Another important thing to notice, if it wasn’t obvious enough, is that computers also communicate using binary numbers. They pass binary numbers to each other all the time; it’s the only thing they can understand. When a computer sends binary numbers over a wire, the bits are sent in a row, one after the other, like the wagons of a train. This is because at the other end of the cable, the other computer checks whether there is current at a given time, so in a single instant only a single bit can be read. Therefore, while storage and processing units are multiples of bytes (because storage and processing can work on many bits in parallel), data transfer units are multiples of the bit. We have the Kilobit (Kb), the Megabit (Mb), the Gigabit (Gb), and so on, each 1,000 times the previous one. As you can see, the “b” is lowercase. This is because, technically, b is the symbol for a bit, while B is the symbol for a byte. To put it another way, a KB is 8 times bigger than a Kb, because a byte (B) is eight times bigger than a bit (b).
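The bit/byte distinction matters whenever you compare a link speed with a file size. This Python sketch, with hypothetical helper names, converts megabits to megabytes and turns a byte into the row of single bits that would travel down a wire. Sending the most significant bit first is a simplifying assumption; real protocols define their own bit order.

```python
def megabits_to_megabytes(megabits: float) -> float:
    """Convert megabits (Mb) to megabytes (MB): one byte is eight bits."""
    return megabits / 8


def serialize(data: bytes) -> list[int]:
    """Turn bytes into the row of single bits a serial link would send.

    Most significant bit first is an assumption made for readability.
    """
    bits = []
    for byte in data:  # iterating over bytes yields integers 0..255
        bits.extend(int(bit) for bit in format(byte, "08b"))
    return bits


print(megabits_to_megabytes(100))  # 12.5
print(serialize(b"A"))             # [0, 1, 0, 0, 0, 0, 0, 1]
```

So a 100 Mb/s link moves at most 12.5 MB of data per second, before any protocol overhead.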

Now that we have laid a solid foundation about what a bit is, what its multiples are, and why computing requires binary math, we are ready to face binary math itself!

Binary math

We are finally here. We already know that binary math is the system computers use to think, process, and store information, and to communicate. In the end, you will find out that binary math is not so different from our “normal” math, because the concepts behind it are all the same. If you think about it for a minute, you can imagine that our math is also composed of cells. However, each cell can contain 10 different values (0 through 9) instead of just two. In binary math, adding a cell doubles the possible combinations, while in traditional math adding a cell multiplies the possible combinations by ten. That is why our “normal” math is known as the decimal system. Before getting deeper into operations that are specific to binary math, let’s explain how to convert back and forth between decimal and binary numbers.

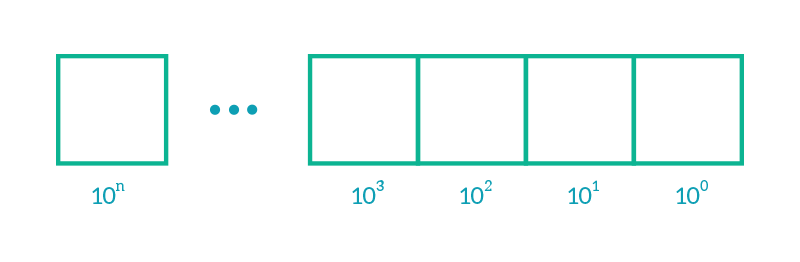

We are often so used to our numeric system that we do not even know how it actually works, so take this as a reminder. In our system, each cell represents a power of 10: lower powers to the right and higher ones to the left. The digit contained in a cell means “how many times is this power of 10 present in the number?”. Let me clarify. If we start from the rightmost cell, we have $10^0$, which equals 1. This means “How many ones are in this number?” So, if you write 9 in that cell, it means that you have “nine ones”, which in turn is nine: the value $10^0$ is present nine times.

If the first power on the right is $10^0$, as we move to the left we find $10^1$, $10^2$, $10^3$, $10^4$, and so on. To find the value of a number, we find out how many times each power is repeated and then sum all the results (for example, 12 is made of $1 \times 10^1$ plus $2 \times 10^0$). The following image gives you some examples.

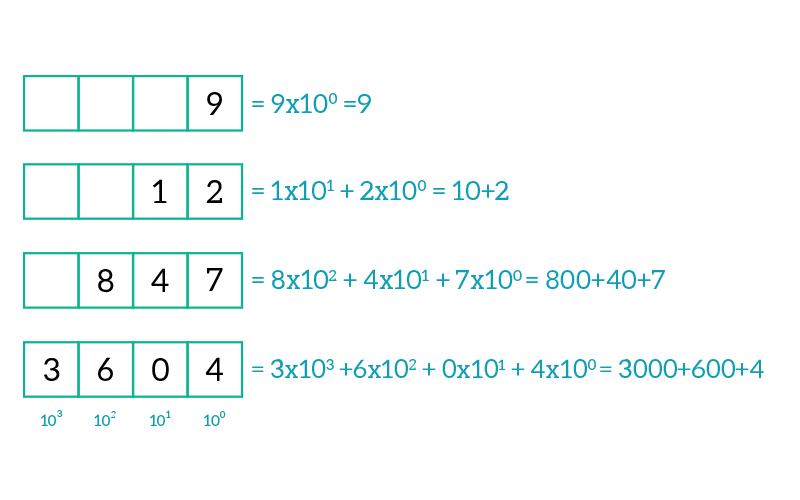

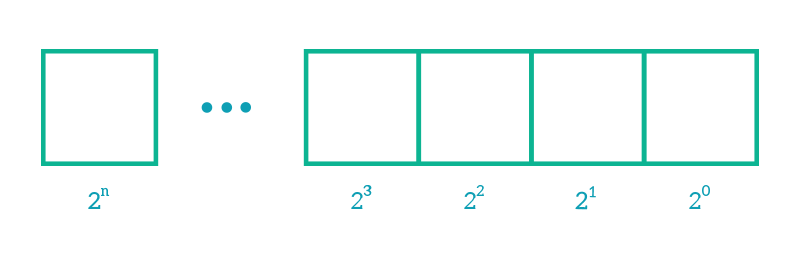
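The place-value rule can be written out in a few lines of Python. This sketch, built around a hypothetical `place_values` helper, splits a non-negative integer into (digit, power of ten) pairs and then sums them back into the original number.

```python
def place_values(number: int) -> list[tuple[int, int]]:
    """Split a non-negative integer into (digit, power of ten) pairs,
    from the leftmost cell to the rightmost one."""
    digits = str(number)
    pairs = []
    for index, digit in enumerate(digits):
        exponent = len(digits) - 1 - index  # the rightmost cell is 10**0
        pairs.append((int(digit), 10 ** exponent))
    return pairs


print(place_values(12))  # [(1, 10), (2, 1)]
# Summing digit * power gives the original number back.
print(sum(digit * power for digit, power in place_values(4096)))  # 4096
```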

You might ask: why powers of ten? Couldn’t they be powers of 9, or 8, or whatever? It is a good question, and the answer is relatively simple. In the decimal system, they must be powers of 10 because in every single digit (cell) we can fit 10 different values (0 through 9). Therefore, with two cells we have one hundred possible combinations (for each digit in the left cell, you can use 10 different digits in the right cell, resulting in $10 \times 10 = 100$). What power should be used if a single cell can hold only two values? You got it: in binary math, we work with powers of two instead of powers of ten. The concept is always the same: we start with $2^0$ (which is still equal to 1) at the far right, then we encounter $2^1$ (which is 2), $2^2$ (which is 4), $2^3$ (which is 8), and so on. If you want to find out the value of each cell, just count its position starting from zero on the right and take the related power of two (a cell in position $x$ has a value of $2^x$).

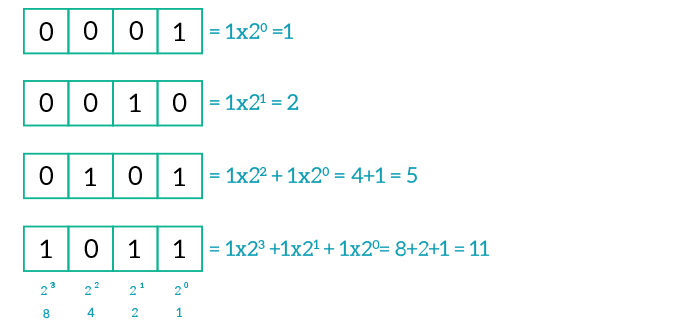

So, converting a binary number into a decimal one is really easy. You just multiply the content of each cell by the value of that cell’s position. If the rightmost cell contains 1, you multiply $1 \times 2^0$, resulting in 1. If instead it contains zero, the result is zero. If you have multiple cells, sum the values of all the cells flagged with one and you’re done. Look at the following examples.

For simplicity, we have used only nibbles (4 bits, or 4 cells), but in reality you are going to work with bytes, so it is best that you know the powers of two at least up to $2^8$ very well. You have to remember them and be quick at conversions. Here is a refresher: $2^0$ equals 1, $2^1$ equals 2, $2^2$ equals 4, $2^3$ equals 8, $2^4$ equals 16, $2^5$ equals 32, $2^6$ equals 64, $2^7$ equals 128, and $2^8$ equals 256. Now, remember this important concept: with $N$ cells, you can represent up to $2^N$ combinations, while the $N$th cell alone has a value of $2^{N-1}$. So, a byte can hold 256 combinations ($2^8$), but its eighth bit (the leftmost one), if it contains 1, has a value of 128 ($2^7$). If you set all the bits to one and do the sum, you end up with 255, the last combination (from 0 to 255 you have 256 combinations).

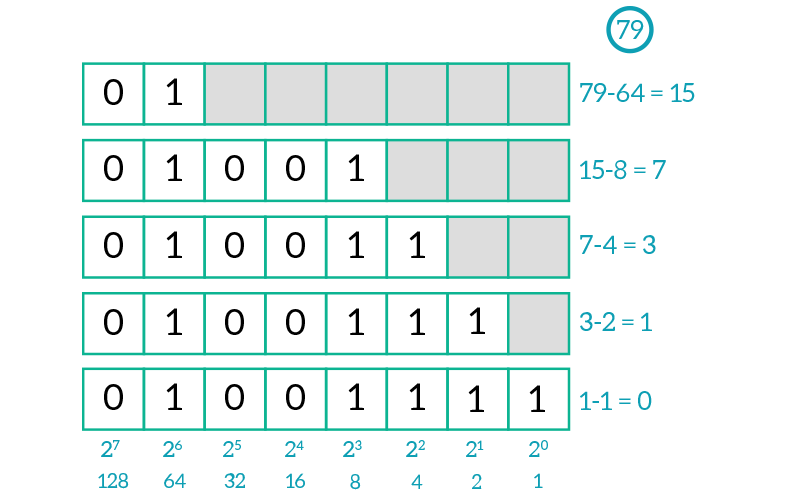
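The binary-to-decimal procedure translates directly into a loop. In this Python sketch, `binary_to_decimal` is a hypothetical helper that takes the number as a string of 0s and 1s and adds $2^p$ for every cell at position $p$ that holds a 1.

```python
def binary_to_decimal(bits: str) -> int:
    """Convert a string of 0s and 1s into its decimal value.

    The cell at position p (counting from 0 on the right) is worth 2**p,
    so we add 2**p for every cell that contains a 1.
    """
    total = 0
    for position, bit in enumerate(reversed(bits)):
        if bit not in ("0", "1"):
            raise ValueError(f"not a binary digit: {bit!r}")
        total += int(bit) * 2 ** position
    return total


# Powers of two up to 2**8, worth memorizing.
print([2 ** exponent for exponent in range(9)])
# [1, 2, 4, 8, 16, 32, 64, 128, 256]

print(binary_to_decimal("1010"))      # 10
print(binary_to_decimal("10000000"))  # 128: the eighth bit alone
print(binary_to_decimal("11111111"))  # 255: all eight bits set
```

Python’s built-in `int("1010", 2)` performs the same conversion; the loop is only there to make each step visible.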

Before moving to the opposite process, try to convert a few binary numbers you make up. Once you feel confident converting binary numbers to decimal numbers, you can move to the next step: converting decimal numbers into binary numbers. Again, you consider the powers of two, starting on the right. For each position you encounter, ask whether the next power of two (the one to its left) is still smaller than or equal to your number. If so, move to that power. You want to find the biggest power of two that is contained in your number. Let’s say you want to convert 79: you are looking for $2^6$ (64), because 128 is bigger than 79. Then you subtract that power from the number ($79 - 64 = 15$) and repeat the process with what you get (in this case, we are looking for $2^3$, which is 8, because 16 is too big). Repeat the process until you are left with zero: here, 15 is $8 + 4 + 2 + 1$, so 79 in binary is 1001111. The following picture will make that clearer.
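The "subtract the biggest power of two" method can be sketched in Python as follows. The helper name `decimal_to_binary` is hypothetical; it first climbs to the biggest power of two that fits, then walks back down, writing 1 for every power it subtracts and 0 for every power it skips.

```python
def decimal_to_binary(number: int) -> str:
    """Convert a non-negative integer to binary by repeatedly subtracting
    the biggest power of two that still fits in what is left."""
    if number < 0:
        raise ValueError("only non-negative numbers are supported")
    if number == 0:
        return "0"
    # Climb to the biggest power of two contained in the number.
    power = 1
    while power * 2 <= number:
        power *= 2
    bits = []
    remainder = number
    # Walk back down through every power, flagging the ones we subtract.
    while power >= 1:
        if power <= remainder:
            bits.append("1")
            remainder -= power
        else:
            bits.append("0")
        power //= 2
    return "".join(bits)


print(decimal_to_binary(79))  # 1001111
```

In everyday Python code, `format(79, "b")` gives the same result; the function above just mirrors the manual steps.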

Now that you understand what binary numbers are and how they work, I suggest you practice with them for a few minutes before moving to the operations we can perform on binary numbers. Before that, I’d like to share two tricks with you. The more you practice, the more binary numbers you will know by heart, so that you do not need to convert them each time. These two observations may help you a lot.

- Odd numbers always have the last (rightmost) bit flagged to one

- The number right before a power of two (like 31 is for 32) is made of all the bits smaller than that power, flagged to one. For example, 8 is 1000 and 7 is 0111; 32 is 100000 and 31 is 011111
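Both tricks are easy to verify with Python’s built-in `format(n, "b")`, which returns the binary digits of `n` as a string. The two helper names below are hypothetical.

```python
def is_odd_by_last_bit(number: int) -> bool:
    """Trick 1: an odd number always ends with a 1 in binary."""
    return format(number, "b")[-1] == "1"


def one_less_than_power(exponent: int) -> str:
    """Trick 2: 2**exponent - 1 is written as a run of `exponent` ones."""
    return format(2 ** exponent - 1, "b")


print(is_odd_by_last_bit(79), is_odd_by_last_bit(32))  # True False
print(format(32, "b"), one_less_than_power(5))          # 100000 11111
print(format(31, "06b"))  # 011111: padded to six bits to line up with 32
```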

Now, we are ready to focus on the binary math we are going to need.

Binary math for networkers

Binary numbers are a popular topic in all ICT faculties, but even if the concepts stay in your mind, they are not needed for most ICT jobs. One of the few jobs where they come in very handy is computer networking. In this section, we will focus on the operations you have to be very good at in order to be a successful technician.

Binary numbers are just a way of representing a value, just like decimal numbers. The value does not change; what changes is how you represent it (with zero and one instead of ten digits). This means that you can do any operation on binary numbers, such as additions, subtractions, divisions, powers, and so on. These are all great things, and computers do them all the time: to display text or video on your screen, to process your data, or to run the artificial intelligence in a game. However, this is far beyond the scope of this article, because these are not the operations a network engineer needs. What you do need to know, instead, are bitwise operations. These operations are specific to binary numbers. Fortunately, they are quite simple to understand. Look at the following picture: for each operation, you have its circuit symbol (logic gate), its name, and how it works.

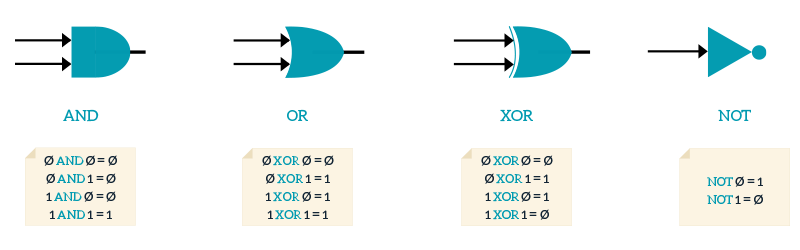

These strange symbols are logic gates, a way of representing a specific part of an electronic circuit. Two signals come in from the left side (two channels), are processed within the symbol, and produce a single output signal that combines the two inputs (NOT is the exception, with a single input). How the signals are combined is decided by the logic gate. To work with these bitwise operators, you can think of the values zero and one as false and true. This is Boolean logic, a way of processing binary numbers as if they represented a condition that is either true or false. Obviously, one means true and zero means false. Given that, we can explain these operators.

- AND – it works with two values, and they both have to be true for the result to be true. Any other case returns false. You can think of it this way: both the first value AND the second value must be true for the result to be true.

- OR – it works with two values, and the result is true if at least one of them is true (it does not matter which one). If they are both true, the result is still true: if the first value OR the second value is true, then the result will be true.

- XOR – it works with two values and it means “exclusive or”. For the result to be true, one of the two values must be true, but not both: if exactly one of the two values is true (and the other is false), the result will be true.

- NOT – it works with one value, and the result is the opposite of that value: if the input value is false, the result will be true, and vice versa.
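The four operators can be tried out on single bits with Python’s built-in `&` (AND), `|` (OR), and `^` (XOR) operators. Python’s `~` operator works on whole signed integers (`~0` is `-1`), so this sketch models a one-bit NOT as `1 - a`; the function names are illustrative.

```python
def and_gate(a: int, b: int) -> int:
    return a & b  # 1 only if both inputs are 1


def or_gate(a: int, b: int) -> int:
    return a | b  # 1 if at least one input is 1


def xor_gate(a: int, b: int) -> int:
    return a ^ b  # 1 if exactly one input is 1


def not_gate(a: int) -> int:
    return 1 - a  # flips a single bit: 0 -> 1, 1 -> 0


print("a b | AND OR XOR")
for a in (0, 1):
    for b in (0, 1):
        print(f"{a} {b} |  {and_gate(a, b)}   {or_gate(a, b)}   {xor_gate(a, b)}")
print("NOT 0 =", not_gate(0), "| NOT 1 =", not_gate(1))
```

The printed table is the truth table of each gate: AND is 1 only in the last row, OR is 0 only in the first, and XOR is 1 only when the inputs differ.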

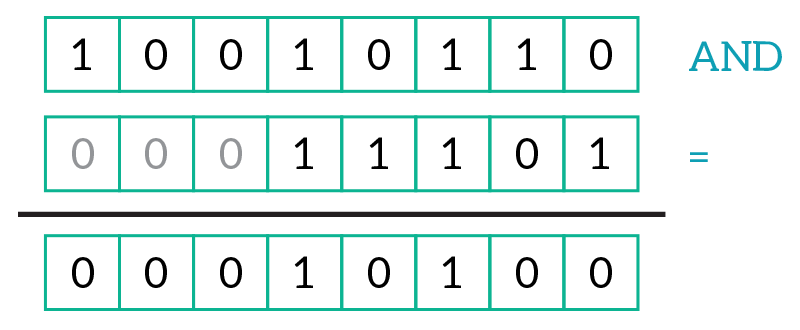

These are the main operations you are going to use in networking when it comes to binary math, with the AND operator being the most widely used and needed. However, with the previous explanation, you can apply bitwise operators only to a single pair of bits (for example 1 AND 0 = 0, 1 OR 0 = 1, and so on), while in the real world you are going to apply them to whole bytes. Fortunately, this is simpler than you might think. Apart from NOT, these operators work with a pair of values, so you will have to work with two inputs at a time. Take the two bytes (or nibbles, words, doubles… whatever) and write them in two aligned rows, so that the first bit of the first value is exactly on top of the first bit of the second value, and so on. In this process, always start from the right, and if one number is shorter (has fewer bits), add extra zeros on its left as needed. Once you have the two numbers aligned one over the other, perform the bitwise operation on each column and write the result at the bottom of the same column. Do that from right to left and boom, you’re done. The following picture is a clear representation of that.
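The column-by-column method for whole bytes can be sketched in Python. The hypothetical helper `bitwise_and_columns` works on binary strings, pads the shorter one with zeros on the left, and ANDs each column from right to left, just as you would on paper.

```python
def bitwise_and_columns(first: str, second: str) -> str:
    """AND two binary strings column by column, as done by hand.

    The shorter string is padded with zeros on the left so the columns line up.
    """
    width = max(len(first), len(second))
    top = first.rjust(width, "0")
    bottom = second.rjust(width, "0")
    result = []
    # Work from the rightmost column to the leftmost one.
    for column in range(width - 1, -1, -1):
        bit = "1" if top[column] == "1" and bottom[column] == "1" else "0"
        result.append(bit)
    return "".join(reversed(result))  # restore left-to-right order


print(bitwise_and_columns("11001010", "1111"))  # 00001010
```

On integers, Python’s `&` operator does all columns at once: `0b11001010 & 0b1111` is `0b1010`, the same value without the leading zeros.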

Once you get these concepts, it is time to practice. Get used to working with bitwise operators, mainly AND. Once you feel confident with this kind of math, you’ll be ready to dive into the depths of real networking. The following section will show you what you can do with all these zeroes and ones.

Why binary math is so crucial

All this binary math and logic may seem weird at first glance, and to some extent it is. Many people who rack their brains over it have a hard time understanding it, so they start asking “Why is all of that needed? Couldn’t we work with decimal numbers as always?”, and these are both good questions. If you worked in a different field of IT, such as programming or business intelligence, you could get by up to a certain level without even knowing what binary math is. Personally, I learnt programming without even knowing that binary numbers existed. Even in these jobs, however, a good knowledge of binary numbers will help you make a difference. For network engineers, things are different: you need binary math to do your job. This is a fact; you cannot survive without it. Here are some tasks that you can do only if you know binary math.

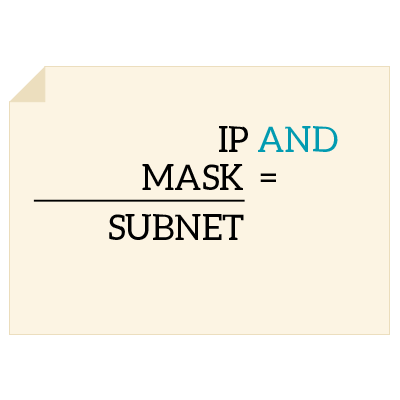

- Developing an addressing scheme – To design even a small network, you have to know which device is going to get which address, and to do that you need subnetting, which mostly involves bitwise AND operations between double words (an IPv4 address and its subnet mask are both 32 bits long)

- Troubleshooting traffic paths – Intermediary devices (such as routers) work with binary numbers all the time to decide the path your data will follow to reach its destination, and you have to be able to identify where a device is going to send the information

- Identifying networks to apply policies – If you work with a network firewall (security appliance), you want to identify the source of the information and decide whether it is allowed to get it; for that you need binary math

- Advanced troubleshooting – At a pro level, you are going to look for specific bits within the data sent, evaluate the data-link layer checksum (a field used to validate data), and do other advanced things that require binary math

This list could go on with many other items, but it is just meant to give you an idea. Without the first three skills, you just can’t be a network engineer.
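To make the first task concrete, here is a minimal sketch of how a network address is found: pack the IPv4 address and the subnet mask into 32-bit integers and AND them. The helper names are hypothetical, the input is assumed to be well-formed dotted-decimal notation, and the `<<` and `>>` shift operators are used only to pack and unpack the four bytes.

```python
def to_int(dotted: str) -> int:
    """Pack a dotted-decimal IPv4 address into one 32-bit integer."""
    value = 0
    for octet in dotted.split("."):
        value = (value << 8) | int(octet)  # make room, then add the next byte
    return value


def to_dotted(value: int) -> str:
    """Unpack a 32-bit integer back into dotted-decimal notation."""
    return ".".join(str((value >> shift) & 0xFF) for shift in (24, 16, 8, 0))


def network_address(address: str, mask: str) -> str:
    """The network part of an address is simply address AND mask."""
    return to_dotted(to_int(address) & to_int(mask))


print(network_address("192.168.1.130", "255.255.255.192"))  # 192.168.1.128
```

Only the last byte changes here: 130 is 10000010, 192 is 11000000, and their AND is 10000000, which is 128. For real work, Python’s standard `ipaddress` module does this for you: `ipaddress.ip_interface("192.168.1.130/26").network` returns `IPv4Network('192.168.1.128/26')`.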

More than that, binary math is crucial for understanding hexadecimal math (based on powers of 16 rather than 2), which is used by IPv6, the newest protocol for network addressing. Take your time to make these concepts your own, because if you know them very well, you will truly make a difference.
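Because 16 is $2^4$, every hexadecimal digit maps to exactly four bits (a nibble), which is why binary makes hexadecimal easy. This sketch expands a hexadecimal string one digit at a time; `hex_to_nibbles` is a hypothetical helper, and 2001 is used as a sample 16-bit group of the kind found in IPv6 addresses.

```python
def hex_to_nibbles(hex_digits: str) -> list[str]:
    """Expand each hexadecimal digit into its 4-bit (nibble) binary form."""
    return [format(int(digit, 16), "04b") for digit in hex_digits]


print(hex_to_nibbles("2001"))  # ['0010', '0000', '0000', '0001']
print(int("2001", 16))         # 8193
print(hex_to_nibbles("ff"))    # ['1111', '1111']
```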